I have two big changes to announce!

The first is that I’ve recently founded my next company: BlueLine Game Studios. It’s been a long time coming and I was really looking forward to the chance to work with Geoff Brown again. After the marathon in September – with all of the extra time from not training anymore – it only took a few days before my restlessness had me thinking over all of the things I’d been studying about Indie Gaming for the last several years.

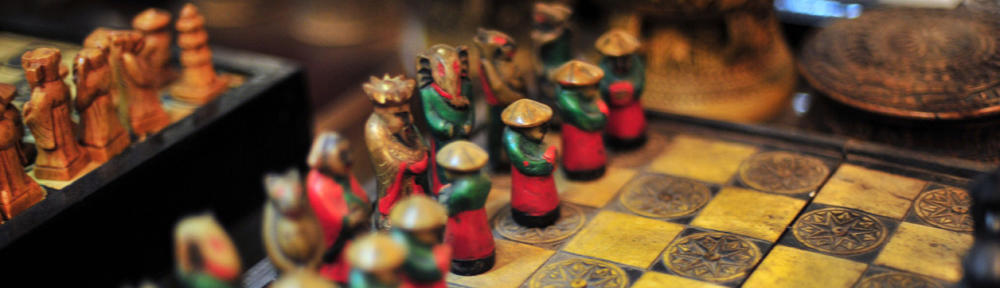

It seems almost inevitable that I would start a gaming company someday and it was a no-brainer that Geoff would be the first man to pull on board if I could get him. At this point, I just didn’t have any more excuses to delay it. I jumped in and contacted the developers of the best boardgames I could find (mostly Mensa Select winners) to discuss licensing. Much to my surprise they were all very responsive, seemed eager to work together, and moved fast. Before I knew it, we had a licensing deal signed to bring one of the most awesome boardgames ever to Xbox 360!

Since then, we signed a deal with another boardgame (to be announced soon ;)). They were seriously the #1 and #2 boardgames that I wanted to make for Xbox 360!

Things have been going quite well. …and fast! Which brings me to my second big change:

Starting Dec. 12th, I’ll be cutting back to 3 days per week at Wikia. I’ve been working on LyricWiki since early 2006. A few months after Wikia acquired LyricWiki, they pulled me in to head up LyricWiki work again and help with development in general. It’s been a great couple of years in which I’ve met some amazing people, but it’s time for me to sow some other seeds also. Furthermore, Wikia is in a great spot and can certainly survive without my full-time attention ;). Just this morning I noticed that according to Quantcast, Wikia is the 40th biggest site/group-of-sites on the internet (in terms of monthly US uniques). That’s awesome. When I joined, most people I met didn’t know the name Wikia. Two years later we’re starting to become a household name and we have more traffic than MySpace! It’s certainly been an exciting ride so far.

Better yet, since I’m not even leaving (just cutting back) I can do my part to continue to help Wikia grow to its next big milestones.

I’m looking forward to the adventures in store with this new arrangement and am happy to be back “in the startup arena”*.

If you think this sounds intriguing at all, please follow BlueLine Games on Twitter, Facebook, Google+, its blog, or sign up to be notified when Hive for Xbox 360 goes live!

* Wikia is so profitable & has so many employees that it’s hard to call it a startup. So much job security… ugh! ;)
